The Japanese market for generative AI, which gained momentum with the November 2022 release of ChatGPT, is expected to grow by an average of 47.2% per year through 2030, with demand reaching 1.8 trillion yen.※ After 2023, a year in which diverse generative AI solutions emerged and were introduced within companies, what changes can we expect in 2024? Nomura Research Institute (NRI) has conducted an in-depth investigation and analysis of the impact that advances in generative AI will have on work and on the future outlook of six major industries: manufacturing, finance, distribution/retail, advertising, entertainment, and government. We spoke with Junichi Shiozaki of the Center for Strategic Management and Innovation, Yoshiaki Nagaya and Takashi Sagimori of the DX Platform Division, and Yoko Matsuzaki of NRI Digital, who drew on their expertise to discuss generative AI’s technological progress, the challenges that accompany it, and its outlook going forward.
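
As a rough check on what 47.2% annual growth implies, assume (this is our assumption, not stated in the forecast) that growth compounds over seven years from a 2023 base to 2030. The 2030 figure then corresponds to roughly a fifteenfold increase:

$$
S_{2030} = S_{2023}\,(1 + 0.472)^{7} \approx 14.97\,S_{2023}
\quad\Longrightarrow\quad
S_{2023} \approx \frac{1.8\ \text{trillion yen}}{14.97} \approx 0.12\ \text{trillion yen}
$$

The implied base of roughly 120 billion yen is a back-calculation under that assumption, not a figure quoted from the forecast.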

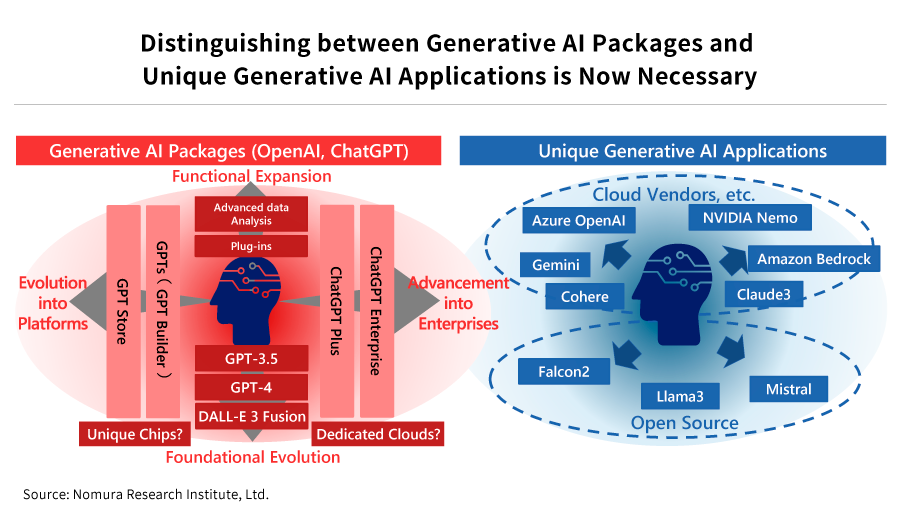

Advancement toward “generative AI packages” and “unique generative applications”

Up until 2023, the primary generative AI issues from a corporate perspective were improving the performance of the underlying models and building large-scale AI platforms. In 2024, however, practical software applications will drive dramatic gains in differentiation and competitiveness, and it will be essential to improve AI’s reliability, expand its functionality, raise application productivity, and acquire security-related technologies. Generative AI can now be said to have shifted from “trial” to “utilization”.

ChatGPT, the spark that ignited the AI revolution, has since gained a variety of new functions. “GPT-4”, first released in March 2023, can now accept a far greater number of input tokens and can even work with external files. In addition, November 2023 saw the arrival of “GPTs”, which let users customize GPT through conversation, for example to develop their own applications. This made it easy to call up a GPT tailored to specific tasks or dialogues, and to publish customized versions of GPT for other users.

In February 2024, OpenAI began testing a “Memory” function that stores conversation histories with users. It lets ChatGPT refer to past dialogues and reuse earlier instructions and outcomes, personalizing ChatGPT on the basis of those histories. Through these additions, ChatGPT is evolving from a simple chat tool into a “generative AI package” that makes the latest generative AI functions easy to use as soon as it is introduced.

Meanwhile, achieving a uniqueness that existing packaged models cannot deliver requires “unique generative models”. As demand shifts in this direction, attention has focused on “fine-tuning”, a process in which a pre-trained model is further trained and finely adjusted for specific tasks. Recently, unique generative AIs built on open-source models such as “Llama 3” and “Mistral”, or on cloud vendor technologies, have begun to emerge. These open-source models can be fine-tuned to shape their outputs into distinctive forms, and they can also undergo continued pre-training to acquire new knowledge, which makes them well suited to industry-specific applications. In addition, because open-source models can run in local, secure environments, they can be expected to appeal to companies that handle confidential information.
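
To make the idea of fine-tuning concrete, here is a deliberately tiny sketch in which a two-feature logistic regression stands in for a large language model. It is a toy under loud assumptions, not how models such as Llama 3 or Mistral are actually tuned: the datasets and the helper names `sgd`, `log_loss`, and `accuracy` are hypothetical. What it does show is the core mechanism: fine-tuning reuses pre-trained parameters as the starting point and continues training on a small, task-specific dataset.

```python
import math
from typing import List, Sequence, Tuple

Example = Tuple[List[float], int]  # (features, label in {0, 1})

def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Probability that x belongs to class 1."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def log_loss(w, b, data: Sequence[Example]) -> float:
    eps = 1e-12  # avoids log(0)
    total = 0.0
    for x, y in data:
        p = predict(w, b, x)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)

def accuracy(w, b, data: Sequence[Example]) -> float:
    return sum((predict(w, b, x) >= 0.5) == (y == 1) for x, y in data) / len(data)

def sgd(w, b, data: Sequence[Example], lr: float, epochs: int):
    """Stochastic gradient descent on log-loss, starting from the given (w, b)."""
    w = list(w)
    for _ in range(epochs):
        for x, y in data:
            err = predict(w, b, x) - y  # gradient of log-loss w.r.t. the logit
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

# Hypothetical "general" data: class 1 whenever the first feature is positive.
GENERAL = [([2.0, 1.0], 1), ([1.0, -2.0], 1), ([3.0, 0.0], 1),
           ([-2.0, 1.0], 0), ([-1.0, -1.0], 0), ([-3.0, 2.0], 0)]
# Hypothetical task data following a different rule: class 1 whenever x1 > x0.
TASK = [([1.0, 2.0], 1), ([2.0, 3.0], 1), ([-1.0, 0.5], 1),
        ([2.0, 1.0], 0), ([1.0, -1.0], 0), ([-0.5, -2.0], 0)]

# "Pre-training": learn general-purpose parameters starting from zeros.
pre_w, pre_b = sgd([0.0, 0.0], 0.0, GENERAL, lr=0.1, epochs=100)

# "Fine-tuning": start from the pre-trained parameters and adapt them
# to the small task dataset with a smaller learning rate.
ft_w, ft_b = sgd(pre_w, pre_b, TASK, lr=0.05, epochs=1000)

print("task loss before/after:", log_loss(pre_w, pre_b, TASK), log_loss(ft_w, ft_b, TASK))
print("task accuracy before/after:", accuracy(pre_w, pre_b, TASK), accuracy(ft_w, ft_b, TASK))
```

The only differences between the two `sgd` calls are the starting parameters and the learning rate, which is the essence of fine-tuning in this toy. Continued pre-training, also mentioned above, would look similar but would use a large, general corpus rather than a small task dataset.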

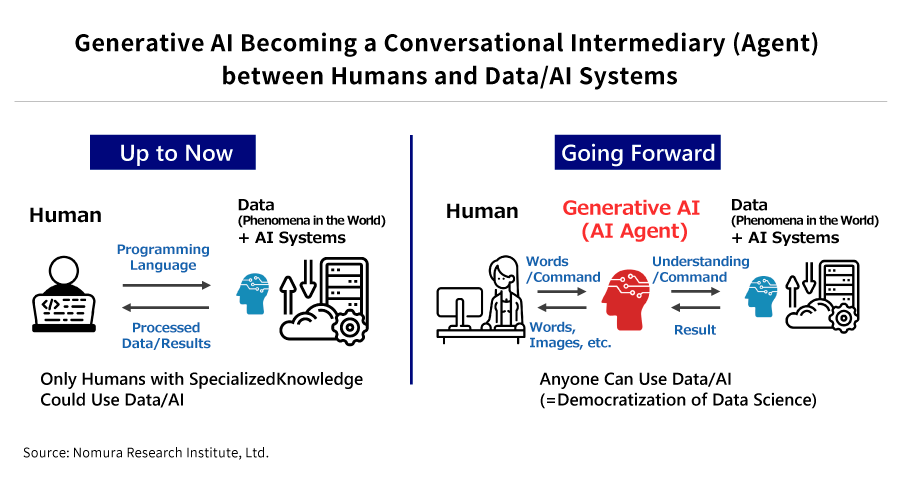

The spread of “AI agents” for ascertaining conditions and taking optimal actions

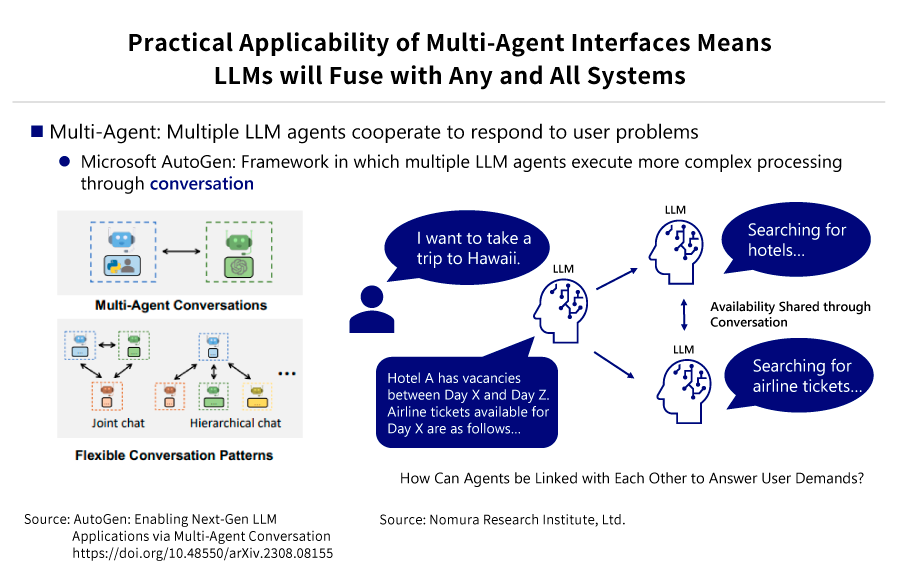

2024 is also likely to see increasing use of AI agents built on generative AI. An AI agent is an autonomous system in which generative AI acts as a conversational intermediary (agent) between humans and data/AI systems; even without detailed instructions, it ascertains conditions and takes optimal actions in pursuit of a goal. In the future, conversational UIs built on such agents could replace conventional UIs and become the entry point to a variety of services for business and everyday life. Multiple AI agents with different specialties and values will act as intermediaries for services, appropriately interpreting what users want and communicating it to systems.

We may be approaching an era in which, given a vague objective such as “I want to go on vacation”, an AI agent that searches for hotels and an AI agent that searches for airline tickets can confer with each other and propose an optimal plan based on the user’s preferences, without detailed instructions.
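
The coordination pattern in this vacation example can be sketched as follows. This is a minimal illustration under stated assumptions: `FlightAgent`, `HotelAgent`, and `coordinate` are hypothetical names, the specialist agents are rule-based stand-ins for generative AI models, the fares and room rates are made-up example data, and “optimal” is taken to mean the cheapest flight-plus-hotel combination within the user’s budget.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

@dataclass
class Offer:
    item: str
    destination: str
    price: int  # total price in yen

class FlightAgent:
    """Hypothetical specialist; a real agent would call a generative AI model and a flight search API."""
    def __init__(self, fares: Dict[str, List[Tuple[str, int]]]):
        self.fares = fares  # destination -> [(flight name, round-trip fare)]

    def handle(self, request: dict) -> List[Offer]:
        dest = request["destination"]
        return [Offer(name, dest, fare) for name, fare in self.fares.get(dest, [])]

class HotelAgent:
    """Hypothetical specialist for hotel search."""
    def __init__(self, rooms: Dict[str, List[Tuple[str, int]]]):
        self.rooms = rooms  # destination -> [(hotel name, price per night)]

    def handle(self, request: dict) -> List[Offer]:
        dest, nights = request["destination"], request["nights"]
        return [Offer(name, dest, rate * nights) for name, rate in self.rooms.get(dest, [])]

def coordinate(goal: str, prefs: dict, flights: FlightAgent, hotels: HotelAgent) -> Optional[dict]:
    """Turn a vague goal plus stored user preferences into concrete requests,
    collect offers from each specialist agent, and return the cheapest
    flight + hotel plan within budget, or None if nothing fits."""
    best = None
    for dest in prefs["favorite_destinations"]:
        # The goal text is passed along so an LLM-backed agent could interpret it;
        # these rule-based stand-ins simply ignore it.
        request = {"goal": goal, "destination": dest, "nights": prefs["nights"]}
        for flight in flights.handle(request):
            for hotel in hotels.handle(request):
                total = flight.price + hotel.price
                if total <= prefs["budget"] and (best is None or total < best["total"]):
                    best = {"destination": dest, "flight": flight.item,
                            "hotel": hotel.item, "total": total}
    return best

# Usage with made-up data:
prefs = {"favorite_destinations": ["Okinawa", "Hokkaido"], "nights": 2, "budget": 80000}
flight_agent = FlightAgent({"Okinawa": [("Flight A", 40000)], "Hokkaido": [("Flight B", 30000)]})
hotel_agent = HotelAgent({"Okinawa": [("Beach Inn", 15000)],
                          "Hokkaido": [("Snow Lodge", 20000), ("Budget Stay", 12000)]})
plan = coordinate("I want to go on vacation", prefs, flight_agent, hotel_agent)
print(plan)
```

Here the user never specifies a destination, flight, or hotel; the coordinator derives concrete requests from stored preferences, and each specialist answers only within its own domain.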

To improve performance, developers have begun to adopt MoE (Mixture of Experts), an architecture that combines multiple specialized neural networks (“experts”) with a gating network that decides which experts handle each input. Methods of networking multiple AI models to obtain higher-quality responses are also under examination. Such interactions between models could produce human-like systems in which models exchange “words” rather than mechanical data transmissions.
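
The gating idea behind MoE can be sketched in a few lines. This is a minimal, framework-free illustration of one common variant (sparse top-k gating), not the architecture of any particular model; the toy experts, the gate weights, and the name `moe_forward` are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]

def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(
    x: Vector,
    experts: Sequence[Callable[[Vector], Vector]],
    gate_weights: Sequence[Vector],
    top_k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Route x to the top_k experts chosen by a linear gate and mix their outputs.
    Assumes every expert returns a vector of the same length as x."""
    # Gate: one score (logit) per expert, here a dot product with x.
    logits = [sum(w * xi for w, xi in zip(gw, x)) for gw in gate_weights]
    # Keep only the highest-scoring experts; the others are never evaluated,
    # which is how sparse MoE keeps compute per input low.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    mix = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for weight, i in zip(mix, chosen):
        y = experts[i](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, chosen

# Hypothetical toy experts: each is just a fixed function of the input vector.
experts = [
    lambda v: [2 * a for a in v],  # expert 0: doubles the input
    lambda v: [-a for a in v],     # expert 1: negates it
    lambda v: [a + 1 for a in v],  # expert 2: shifts it by one
]
gate = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]  # hypothetical gate weights

output, used = moe_forward([1.0, 2.0], experts, gate, top_k=2)
print(used, output)
```

With `top_k=1` the layer reduces to picking a single expert per input; larger values blend several experts’ outputs, weighted by the gate.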

Generative AI is also becoming capable of recognizing audio, images, and video in addition to text. For example, an e-commerce website could respond to a request given only as text or speech by extracting relevant images in real time and carrying out the requested task. This multimodalization, in which multiple types of data are processed together, looks set to continue growing in 2024 and beyond.

Casting light on security and data use issues

Generative AI’s integration into society also poses new threats. The most serious of these is the risk of data breaches. Recent years have seen many incidents in which confidential information was exposed through attacks that exploited vulnerabilities in the memory or development functions built into generative AI. As operational use of generative AI continues to expand in Japan, these security concerns will need to be addressed.

Another risk that threatens to erode trust in generative AI is hallucination, in which models produce factually incorrect answers. A promising countermeasure is RAG (Retrieval-Augmented Generation), which is said to suppress hallucinations by retrieving the knowledge needed to answer a user from external data. Because the precision of RAG’s answers depends on how accurately relevant information can be retrieved, semantic retrieval, which judges similarity in meaning between texts, is increasingly being used alongside ordinary keyword search.
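
The retrieval step of RAG, and the difference between keyword matching and similarity-based retrieval, can be sketched as follows. Everything here is hypothetical: the three documents, the `CONCEPTS` synonym table (a crude stand-in for a trained embedding model), and the function names. The final call to a language model is deliberately left out; `build_prompt` only shows how retrieved text is placed in front of the question.

```python
import math
import re
from collections import Counter
from typing import List

# Hypothetical concept table standing in for a trained embedding model:
# synonyms map to the same dimension, so meaning rather than spelling matters.
CONCEPTS = {"holiday": "leave", "vacation": "leave", "leave": "leave",
            "salary": "pay", "wage": "pay", "pay": "pay"}

DOCS = [
    "Employees receive 20 days of paid leave per year.",
    "Salary is paid on the 25th of each month.",
    "Meeting rooms are booked online.",
]

def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())

def keyword_overlap(query: str, doc: str) -> int:
    """Ordinary keyword search: count exact words shared by query and doc."""
    return len(set(tokenize(query)) & set(tokenize(doc)))

def embed(text: str) -> Counter:
    """Toy embedding: a sparse bag-of-concepts vector."""
    return Counter(CONCEPTS.get(tok, tok) for tok in tokenize(text))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def build_prompt(query: str, docs: List[str]) -> str:
    """Ground the model's answer in retrieved text (the generation call itself is omitted)."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"

print(build_prompt("How much holiday can I take?", DOCS))
```

In this toy, the query “How much holiday can I take?” shares no exact words with the leave-policy document, so keyword overlap scores it 0, while the concept-level similarity still ranks it first.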

The data used by generative AI includes information published by media companies, which points to the need for debate on how AI and the media can coexist. Recently, some media outlets have begun blocking OpenAI from collecting their articles. OpenAI has started paying data usage fees to media companies, but there is growing concern that paying for and hoarding data, which requires abundant capital, will make it difficult for competitors to enter the market and will encourage monopolies over AI technology.

In response to these concerns, 2024 should see progress in establishing generative AI legislation, guidelines, and datasets, as well as discussions aimed at AI standardization.

Awareness of generative AI among Japanese people

In the “Survey of Workers on the Use of Generative AI” conducted by NRI in October 2023, 41.8% of respondents in Japan had positive expectations for generative AI, significantly more than the 22.8% with negative expectations. The sense of crisis about job loss is low in Japan, suggesting strong expectations for generative AI. However, respondents’ anxieties about generative AI revealed concerns about privacy and misuse: 37.1% were concerned about “Personal Information Breaches” and 33.9% about “Reliability of Information”.

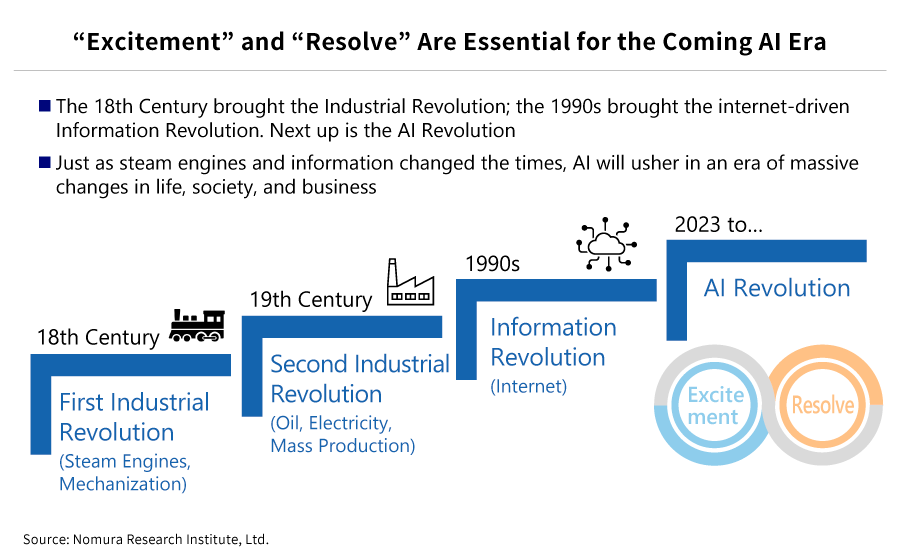

Many Japanese data scientists rank among the top performers in global data science competitions, which suggests that Japan has high potential to incorporate and advance generative AI, and that these efforts should be accelerated further. Achieving this will demand not only promoting the technology’s advantages and fostering the expectations of everyday people, but also communicating frankly about its risks and other challenges. Expectations and challenges are an inseparable pair, and it is vital that generative AI be advanced in a safe and healthy way. The advancement of generative AI will mark a major inflection point building on the information revolution sparked by the internet. We must face these changing times with both “excitement” and “resolve”.

※ Japan Electronics and Information Technology Industries Association, “Generative AI Market Global Demand Forecast”, https://www.jeita.or.jp/japanese/topics/2023/1221-2.pdf

Profile

- Junichi Shiozaki
- Yoshiaki Nagaya
- Yoko Matsuzaki

* Organization names and job titles may differ from the current version.