How Will Generative AI Change Business?

The popularity of ChatGPT has brought attention to the business applications of generative AI. However, generative AI has its weak points and risks, and its adoption requires appropriate consideration and preparation. What should companies do to make use of generative AI in their businesses? We asked Takashi Sagimori and Yoshiaki Nagaya of the Center for Strategic Management & Innovation, who are experts on this topic, about the prospects and challenges involved from a technological perspective.

Expanding business use of generative AI, including ad creation and design

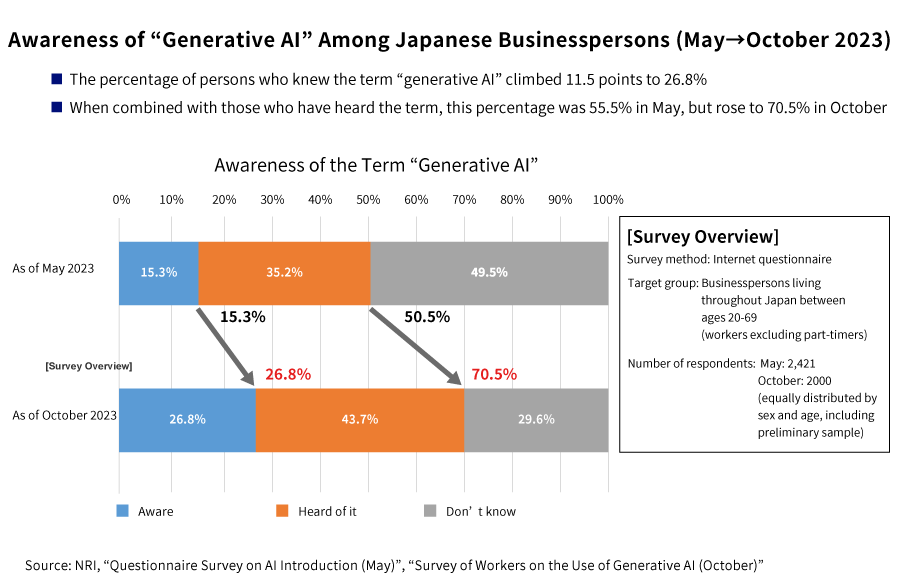

With the advent of generative AI, AI can now “create” new content such as text, images, and audio. According to surveys conducted by Nomura Research Institute (NRI) in May and October 2023, awareness of generative AI is growing rapidly among Japanese businesspeople. Awareness rose from 50.5% in May to 70.5% just five months later, in October.

The global market for generative AI is expanding rapidly. It was estimated at approximately 1.5 trillion yen in 2022 and is projected to reach around 21 trillion yen *1 in 2032. Expectations for business use in Japan are growing accordingly, and adoption and trials have already begun, mainly in the IT, telecommunications, and education/learning industries. Beyond internal support tasks such as preparing manuals and meeting minutes, companies now also use generative AI for customer-facing work, mainly in creative fields such as advertisement production and office design. Some companies are using it to offer unique new services, such as generating original music or proposing product package designs.
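The two market figures above imply a very steep growth curve. The short sketch below only reworks the article's own numbers. It computes the compound annual growth rate (CAGR) between 2022 and 2032 and converts both values back to US dollars at the 140 yen per dollar rate given in footnote *1. It is not an independent forecast.

```python
JPY_PER_USD = 140  # exchange rate stated in footnote *1

def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1

def jpy_to_usd(amount_jpy, rate=JPY_PER_USD):
    return amount_jpy / rate

market_2022_jpy = 1.5e12  # approx. 1.5 trillion yen (2022)
market_2032_jpy = 21e12   # approx. 21 trillion yen (2032 projection)

growth = cagr(market_2022_jpy, market_2032_jpy, years=10)
print(f"Implied CAGR: {growth:.1%}")  # roughly 30% per year
print(f"2022: ~${jpy_to_usd(market_2022_jpy) / 1e9:.1f} billion")
print(f"2032: ~${jpy_to_usd(market_2032_jpy) / 1e9:.1f} billion")
```

In other words, the projection assumes the market grows by roughly 30% every year for a decade.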

However, business use of generative AI also entails risks, and those risks have become more multifaceted now that AI, which used to “automate predetermined actions,” can “create.” Training data collected from the Internet may contain copyrighted or harmful content. In addition, a generative AI model may be built on top of other models during its development, and it is extremely difficult to trace this entire lineage back to confirm whether the result can be used commercially. Copyright infringement of this kind is a prime example of the risks of generative AI.
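Tracing a model's “genealogy” amounts to walking a graph of the models it was derived from. The sketch below is purely hypothetical. The model names, the `LINEAGE` and `LICENSES` tables, and the single “commercial use allowed” flag are all invented for illustration, and a real license review involves far more than a boolean. The code visits every ancestor of a model and reports any whose terms are unknown or forbid commercial use.

```python
# Hypothetical data: each model lists the models it was derived from.
LINEAGE = {
    "our-chat-model": ["tuned-base-v2"],
    "tuned-base-v2": ["open-base-v1", "distilled-helper"],
    "open-base-v1": [],
    "distilled-helper": ["research-only-model"],
    "research-only-model": [],
}

# Hypothetical license flags: True = commercial use allowed, False = not allowed.
LICENSES = {
    "our-chat-model": True,
    "tuned-base-v2": True,
    "open-base-v1": True,
    "distilled-helper": True,
    "research-only-model": False,
}

def ancestors(model, lineage):
    """Return every model reachable through 'derived from' links (depth-first)."""
    seen, stack = [], list(lineage.get(model, []))
    while stack:
        parent = stack.pop()
        if parent not in seen:
            seen.append(parent)
            stack.extend(lineage.get(parent, []))
    return seen

def commercial_blockers(model, lineage, licenses):
    """List the model or any ancestor whose license is missing or forbids commercial use."""
    chain = [model] + ancestors(model, lineage)
    return [m for m in chain if licenses.get(m) is not True]

print(commercial_blockers("our-chat-model", LINEAGE, LICENSES))
```

The example shows why the whole chain matters. The model being checked and its direct parent both allow commercial use, yet a restriction two levels up still blocks it.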

Generative AI is fundamentally ill-suited to answering “I don’t know”: it tends to generate a plausible-sounding response even when it lacks the relevant knowledge. As a result, it can produce inaccurate answers, sometimes repeatedly, a phenomenon known as hallucination. Fact-checking is therefore essential when it is used for information retrieval. An issue that has become even more serious in recent years is that of “deepfakes,” the creation and spread of false information by malicious users. Generative AI can easily produce extremely realistic images, videos, speech, and more, and there has been a rash of cases in which people have exploited it to spread fake images and videos for fraudulent purposes. Against this backdrop, AI-related laws and regulations are being formulated both in Japan and abroad. When considering adopting this technology, companies should pay close attention to AI guidelines in Japan as well as to legislative and regulatory trends overseas.

What businesspeople need in the era of generative AI is the ability to understand AI and to leverage it successfully in business. This means data literacy and an understanding of basic AI principles and how to use AI. They will likely also need the ability to assess the risks involved, share them with colleagues, and provide training in countermeasures. They must gain these abilities by getting directly involved with AI themselves and by staying up to date with the latest information.

Why is generative AI able to give “human-like” responses?

Generative AI feels natural to interact with, almost as if one were talking with a human. This is underpinned by the Transformer, a neural network architecture that Google researchers introduced in 2017 and on which today’s large language models are built. Before that, mainstream models read the words of a sentence one at a time, in order, and predicted what would come next. For example, “Pleased to” would likely be followed by “meet you.” The Transformer instead looks at a whole sentence at once and models the relationship between every pair of words. This ability to capture relationships even between words that are far apart has dramatically improved the accuracy of language understanding.
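The operation that lets a Transformer relate every word to every other word is called attention. The sketch below implements scaled dot-product self-attention in plain Python for the toy sentence “Pleased to meet you.” The two-dimensional word vectors are made up for illustration. For simplicity, the same vectors also serve as queries, keys, and values. A real Transformer instead derives these through learned projections and stacks many attention heads and layers.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a whole sequence at once.

    Every position compares its query with the key of *every* position,
    so even distant words can influence each other directly.
    Returns (outputs, weights); weights[i][j] is how much word i attends to word j.
    """
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        w = softmax([dot(q, k) / math.sqrt(d_k) for k in keys])
        weights.append(w)
        # Each output is the attention-weighted average of the value vectors.
        outputs.append([sum(w_j * v[d] for w_j, v in zip(w, values))
                        for d in range(len(values[0]))])
    return outputs, weights

tokens = ["Pleased", "to", "meet", "you"]
# Made-up 2-dimensional word vectors, used as queries, keys and values alike.
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
out, weights = self_attention(vectors, vectors, vectors)
for token, row in zip(tokens, weights):
    print(token, [round(x, 2) for x in row])
```

Each row of `weights` shows how strongly one word attends to every word in the sentence, including words far away from it. The rows are computed independently of one another, which is also what makes the computation easy to parallelize.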

The Transformer has also improved training efficiency. Because its structure allows computation to be parallelized during training, it can, given sufficient computational resources, learn from amounts of data that would once have seemed impossible to handle. To improve the accuracy of an AI’s responses, a large amount of training data must be prepared in a form suitable for learning. This is where self-supervised learning comes into play. Self-supervised learning requires no manual annotation, because it generates training questions mechanically from the raw data itself, for example by hiding a word and asking the model to fill in the blank. As a result, large amounts of training data can be prepared in a short time.
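The “fill-in-the-blank” idea can be illustrated with a few lines of Python that turn raw, unlabeled text into training examples with no human annotation. This is a simplified sketch. It splits text on whitespace rather than using a real tokenizer. It shows two common self-supervised objectives, next-word prediction and masked-word prediction. The `[MASK]` token is an assumed placeholder.

```python
def next_word_examples(text):
    """Turn raw text into (context, target) pairs: predict the next word."""
    words = text.split()
    return [(" ".join(words[:i]), words[i]) for i in range(1, len(words))]

def masked_word_examples(text, mask_token="[MASK]"):
    """Hide one word per example and ask the model to fill in the blank."""
    words = text.split()
    examples = []
    for i, word in enumerate(words):
        masked = words[:i] + [mask_token] + words[i + 1:]
        examples.append((" ".join(masked), word))
    return examples

sentence = "Pleased to meet you"
for context, target in next_word_examples(sentence):
    print(f"{context!r} -> {target!r}")
for question, answer in masked_word_examples(sentence):
    print(f"{question!r} -> {answer!r}")
```

Both functions derive the “correct answer” from the text itself. This is why any large body of text can be turned into training data at almost no labeling cost.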

With both training data and models suited to learning now widely available, generative AI models have been growing in scale at an accelerating pace. A typical example is the AI supercomputer with 10,000 GPUs that Microsoft built for OpenAI. As a result, generative AI can now handle a wide variety of tasks given only short prompts. ChatGPT has also been trained, using human feedback, to tailor its responses to human preferences regarding conversational manners, writing quality, and so forth. The natural, human-like interactivity that generative AI displays is the product of these technologies.
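One publicly described ingredient of this kind of preference training is reinforcement learning from human feedback (RLHF). In RLHF, a reward model first learns from human comparisons of pairs of responses. The sketch below shows only that reward-model step, under heavy simplifying assumptions. Each response is reduced to two hypothetical hand-made features (politeness and number of factual errors). The reward is a linear function of those features, and the weights are fitted with a pairwise, Bradley-Terry-style logistic loss. This is not ChatGPT’s actual training code.

```python
import math

def reward(weights, features):
    """Linear reward: a higher score means the response is judged better."""
    return sum(w * x for w, x in zip(weights, features))

def pairwise_loss(weights, preferred, rejected):
    """-log(sigmoid(margin)): small when the preferred response outscores the rejected one."""
    margin = reward(weights, preferred) - reward(weights, rejected)
    return math.log1p(math.exp(-margin))

def train_reward_model(pairs, dim, lr=0.5, epochs=200):
    """Fit weights by gradient descent on the pairwise loss."""
    weights = [0.0] * dim
    for _ in range(epochs):
        for preferred, rejected in pairs:
            margin = reward(weights, preferred) - reward(weights, rejected)
            # d(loss)/d(margin) = -(1 - sigmoid(margin))
            grad_margin = -(1 - 1 / (1 + math.exp(-margin)))
            for k in range(dim):
                weights[k] -= lr * grad_margin * (preferred[k] - rejected[k])
    return weights

# Hypothetical human judgments; features are [politeness, factual_errors].
comparisons = [
    ([1.0, 0.0], [0.0, 0.0]),  # the polite answer was preferred
    ([0.5, 0.0], [0.5, 1.0]),  # the answer without errors was preferred
]
w = train_reward_model(comparisons, dim=2)
print(w)  # positive weight on politeness, negative weight on errors
```

The trained reward model can then score new responses, and in full RLHF the language model is further optimized to produce responses that score highly.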

A future where AI thinks and acts on its own is at hand

On the other hand, generative AI also has problems not found in conventional AI. The biggest are its substantial costs and long processing times. Generative AI requires huge amounts of electricity and GPU hardware for training and inference, which makes it extremely expensive. In addition, compared with recognition-type AI (e.g., text recognition), its large models respond more slowly. The training data it requires is also so massive that its content cannot be fully examined.
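A common back-of-the-envelope way to see why training is so expensive is a rule of thumb: training a Transformer takes roughly 6 floating-point operations (FLOPs) per parameter per training token. The sketch applies this rule to GPT-3’s publicly reported scale of about 175 billion parameters and 300 billion training tokens. The per-GPU throughput is a hypothetical round number, and real training runs achieve only a fraction of a GPU’s peak speed. Treat the result as an order-of-magnitude illustration only.

```python
def training_flops(n_params, n_tokens):
    """Rule of thumb: ~6 FLOPs per parameter per token
    (about 2 for the forward pass and 4 for the backward pass)."""
    return 6 * n_params * n_tokens

def training_days(total_flops, flops_per_gpu, n_gpus):
    """Wall-clock days if every GPU sustained `flops_per_gpu` FLOP/s (an idealization)."""
    seconds = total_flops / (flops_per_gpu * n_gpus)
    return seconds / 86_400

flops = training_flops(175e9, 300e9)  # GPT-3 scale
assumed_gpu_speed = 1e14              # hypothetical sustained 100 TFLOP/s per GPU
days = training_days(flops, assumed_gpu_speed, n_gpus=10_000)
print(f"{flops:.2e} FLOPs, ~{days:.1f} days on 10,000 GPUs")
```

Even under this idealized assumption, the computation corresponds to roughly 36,000 GPU-days. This is why both the hardware and the electricity costs are so large.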

To solve these problems, generative AI will likely split going forward into large-scale, multi-functional models and models that offer limited functions in exchange for lower power consumption. In June 2023, Microsoft Research introduced Phi-1, a model that, despite its much smaller scale, performs comparably to GPT-3.5 on Python code-generation benchmarks. It has just 1.3 billion parameters, less than 1/100th of GPT-3’s 175 billion. In Japan, the research institute Riken is developing a language model tailored to Japanese. Other examples such as Code Llama, a code-generation language model developed by Meta, Facebook’s parent company, likewise point to an emerging trend toward specialized models as opposed to ever-larger ones.
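Parameter count translates directly into hardware requirements. The sketch below estimates only the memory needed to hold a model’s weights, assuming 16-bit (2-byte) numbers. It ignores activations, caches, and other runtime overhead, so real requirements are higher.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

def weight_memory_gb(n_params, dtype="fp16"):
    """Memory (in GB, 1e9 bytes) needed just to store the weights."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9

gpt3_params = 175e9
phi1_params = 1.3e9

print(f"GPT-3: {weight_memory_gb(gpt3_params):.0f} GB")
print(f"Phi-1: {weight_memory_gb(phi1_params):.1f} GB")
print(f"Size ratio: 1/{gpt3_params / phi1_params:.0f}")
```

At about 2.6 GB, weights the size of Phi-1’s fit on a single consumer-grade GPU. GPT-3-scale weights, at about 350 GB, must be spread across many accelerators.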

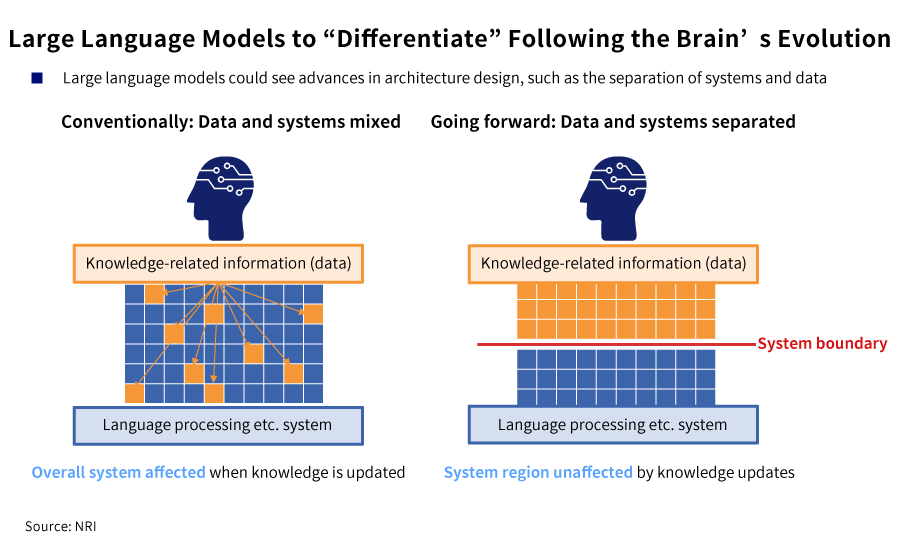

In today’s generative AI, language-processing capabilities such as summarization and semantic comprehension (in other words, system functions) and data such as academic knowledge are tightly coupled within a single massive neural network. Under current circumstances, therefore, adding new knowledge can degrade other, already mature functions, which makes these systems hard to maintain. Looking ahead, we may also see advances in generative AI architecture. One example would be an approach in which a generative AI that handles language processing and one that manages knowledge are separated into independent components and linked only as needed to generate solutions.
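The separated architecture described above can be illustrated very loosely in code. In this hypothetical sketch, a knowledge component is a simple updatable store, and a language component only phrases answers. Real systems would use neural models for both; retrieval-augmented generation is one existing technique in this spirit. The class names and behavior here are illustrative assumptions.

```python
class KnowledgeStore:
    """Hypothetical knowledge component: facts can be added or replaced
    without retraining or touching the language component."""

    def __init__(self):
        self._facts = {}

    def update(self, topic, fact):
        self._facts[topic.lower()] = fact

    def lookup(self, topic):
        return self._facts.get(topic.lower())


class LanguageComponent:
    """Hypothetical language component: only knows how to phrase a reply."""

    def phrase(self, topic, fact):
        if fact is None:
            return f"I don't know about {topic}."
        return f"{topic}: {fact}"


def answer(topic, knowledge, language):
    # The two components are linked only when a question needs both.
    return language.phrase(topic, knowledge.lookup(topic))


kb = KnowledgeStore()
lang = LanguageComponent()
kb.update("Phi-1", "a 1.3-billion-parameter model from Microsoft Research")
print(answer("Phi-1", kb, lang))
print(answer("Code Llama", kb, lang))
```

Updating `kb` changes what the system knows without altering `LanguageComponent` at all. In a single neural network, by contrast, new knowledge and language skills share the same weights.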

Although generative AI runs on computers, arithmetic and other numerical processing is not its strong suit. Accordingly, we are starting to see an approach in which, instead of having generative AI cover every function itself, separate systems supplement (“expand”) the functions it lacks. As users of the paid version of ChatGPT will know, ChatGPT offers an “Advanced Data Analysis” feature. This connects ChatGPT to a Python runtime, Python being a popular language for data analysis. For simple analyses, one can just ask the system in plain language, as casually as writing someone an email. Advanced Data Analysis can be regarded as an early example of having other systems supplement the functions in which generative AI is lacking.
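The “expansion” approach can be sketched as a dispatcher. When the model’s output signals a calculation, a separate, exact calculator handles it instead of the model. The `CALC:` prefix is a hypothetical convention invented for this example; it is not how ChatGPT’s Advanced Data Analysis works internally. The evaluator deliberately accepts only basic arithmetic, so model output is never executed as arbitrary code.

```python
import ast
import operator

# Only these arithmetic operations are allowed.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}

def safe_eval(expr):
    """Evaluate a plain arithmetic expression without running arbitrary code."""
    def _eval(node):
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"unsupported expression: {expr!r}")
    return _eval(ast.parse(expr, mode="eval"))

def handle_model_output(text):
    """If the (hypothetical) model output starts with 'CALC:', hand the expression
    to the calculator instead of trusting the model's own arithmetic."""
    if text.startswith("CALC:"):
        return str(safe_eval(text[len("CALC:"):].strip()))
    return text

print(handle_model_output("CALC: 123456 * 789"))
print(handle_model_output("CALC: 21 / 1.5"))
print(handle_model_output("Here is a summary of the report."))
```

The division of labor is the point. The language model decides *that* a calculation is needed and what it is, and a conventional program produces the exact result.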

In the near future, generative AI will likely reach the point where it can draw up work plans and then carry them out on its own. In November 2022, Google introduced ReAct, a framework that combines reasoning and acting. In ReAct, the AI alternates between thinking about a problem and taking actions, such as using external tools, in order to achieve an objective set by a human. Such AI can be seen not as a simple automation tool, but as something evolving into an autonomous AI agent.
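The reason-then-act cycle at the heart of ReAct can be sketched as a loop. The model writes a Thought and an Action, the program executes the action with a tool, and the resulting Observation is appended to the transcript before the next step. In this sketch the “model” is a hypothetical scripted function, and the only tool is a lookup in a toy dictionary. In practice, both would be replaced by a real LLM call and real tools, and the exact prompt format would differ.

```python
import re

TOY_FACTS = {"phi-1 parameters": "1.3 billion"}  # hypothetical knowledge source

def lookup(query):
    return TOY_FACTS.get(query, "no result")

TOOLS = {"lookup": lookup}
ACTION_RE = re.compile(r"Action: (\w+)\[(.*)\]")

def scripted_model(transcript):
    """Hypothetical stand-in for an LLM: decides the next Thought and Action."""
    if "Observation:" not in transcript:
        return "Thought: I should look this up.\nAction: lookup[phi-1 parameters]"
    observation = transcript.rsplit("Observation: ", 1)[1].strip()
    return f"Thought: The lookup answered the question.\nAction: finish[{observation}]"

def react(question, model, tools, max_steps=5):
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = model(transcript)  # reason: the model writes a Thought and an Action
        transcript += step + "\n"
        match = ACTION_RE.search(step)
        if match is None:
            break
        name, arg = match.groups()
        if name == "finish":
            return arg, transcript
        # act: run the tool and feed the result back as an Observation
        result = tools[name](arg) if name in tools else f"unknown tool {name}"
        transcript += f"Observation: {result}\n"
    return None, transcript

final_answer, log = react("How many parameters does Phi-1 have?", scripted_model, TOOLS)
print(log)
print("Answer:", final_answer)
```

The `max_steps` limit is a simple safeguard so that an agent that never reaches `finish` cannot loop forever.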

Further ahead, multiple AIs will presumably interact with one another to derive even better solutions. We refer to this arrangement here as a “Mixture of Experts” (MoE), borrowing a term that, strictly speaking, denotes a single model that routes each input among specialized sub-networks. In September 2023, researchers at the University of North Carolina at Chapel Hill published a paper titled “ReConcile: Round-Table Conference Improves Reasoning via Consensus among Diverse LLMs” *2. In it, multiple generative AIs conversed with one another and reached solutions superior to what any one of them could have produced alone, illustrating the adage that “two heads are better than one.”
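The round-table idea can be sketched as a loop. Each participant sees the others’ previous answers and may revise its own, and the group settles on a final answer by confidence-weighted voting. This is a simplified illustration loosely inspired by the ReConcile setup, not the paper’s actual method. The agents below are hypothetical rule-based stand-ins for LLMs. Real participants would also exchange explanations, not just answers and confidence scores.

```python
from collections import defaultdict

def weighted_vote(responses):
    """Pick the answer with the highest total confidence.
    `responses` is a list of (answer, confidence) pairs."""
    totals = defaultdict(float)
    for answer, confidence in responses:
        totals[answer] += confidence
    return max(totals, key=totals.get)

def make_agent(answer, confidence):
    """Hypothetical stand-in for an LLM participant. It keeps its own answer
    unless its peers back a different answer with more total confidence."""
    def agent(peer_responses):
        if peer_responses:
            best = weighted_vote(peer_responses)
            support = sum(c for a, c in peer_responses if a == best)
            if best != answer and support > confidence:
                return (best, confidence)
        return (answer, confidence)
    return agent

def round_table(agents, max_rounds=3):
    """Run discussion rounds until everyone agrees or rounds run out,
    then settle the final answer by confidence-weighted vote."""
    responses = [agent([]) for agent in agents]
    history = [responses]
    for _ in range(max_rounds - 1):
        if len({answer for answer, _ in responses}) == 1:
            break  # consensus reached
        # Each agent sees everyone else's previous response.
        responses = [agent(responses[:i] + responses[i + 1:])
                     for i, agent in enumerate(agents)]
        history.append(responses)
    return weighted_vote(responses), history

agents = [make_agent("42", 0.9), make_agent("42", 0.6), make_agent("41", 0.5)]
final, history = round_table(agents)
print(final)
for round_no, responses in enumerate(history):
    print(round_no, responses)
```

Here the least confident participant revises its answer after seeing its peers’ responses, and the group reaches consensus in the second round.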

We think that going forward, generative AI will shift from “single” systems to “multi-system” MoE models that couple multiple AIs together. We believe this will not only improve functionality but also bring other benefits. With a single massive model, it is difficult to know in detail how the system “thinks.” Even if one asks the generative AI to explain its reasoning, there is no guarantee that its answer reflects its actual thought process. If multiple AIs work and converse together, however, their dialogue history can be logged, making it clear to us how they went about solving a given problem.
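Making the reasoning visible largely comes down to recording the exchange. The following is a minimal sketch, assuming each agent’s turn is a plain-text message. The `DialogueLog` class and the sample messages are hypothetical.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone

@dataclass
class Message:
    speaker: str
    text: str
    timestamp: str

@dataclass
class DialogueLog:
    """Append-only record of who said what, and when."""
    messages: list = field(default_factory=list)

    def record(self, speaker: str, text: str) -> None:
        stamp = datetime.now(timezone.utc).isoformat()
        self.messages.append(Message(speaker, text, stamp))

    def replay(self) -> str:
        """Human-readable transcript for reviewing how a conclusion was reached."""
        return "\n".join(f"[{m.speaker}] {m.text}" for m in self.messages)

    def to_jsonl(self) -> str:
        """One JSON object per line, convenient for storage and later auditing."""
        return "\n".join(json.dumps(asdict(m)) for m in self.messages)

log = DialogueLog()
log.record("Agent A", "The answer is 41, because the last step adds 1.")
log.record("Agent B", "I get 42: the last step adds 2, not 1.")
log.record("Agent A", "You are right; I revise my answer to 42.")
print(log.replay())
```

Unlike a single model’s after-the-fact explanation, this transcript *is* the process: every intermediate claim and revision is on record.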

In the future, monitoring services may emerge that watch conversations between generative AIs to detect performance anomalies or ethically undesirable statements. As for how generative AI systems will be linked to one another, which is a matter of considerable interest, we think the protocols will be actual “words.” Links between systems have traditionally been “mechanical,” with communication protocols defined by combinations of data specifications. Because generative AIs have mastered language, however, we believe they will become “human-like systems” that communicate fully through words.
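Because the messages exchanged between AIs would be ordinary language, a monitoring service could inspect them much as it would inspect human text. The sketch below is a deliberately simple, hypothetical rule-based monitor. It flags blocked terms, empty or unusually long messages, and a speaker repeating itself verbatim, which may indicate a stuck loop. A production service would more likely use trained classifiers. The rules, thresholds, and term list here are assumptions.

```python
def monitor(messages, blocked_terms, max_chars=2000):
    """Scan (speaker, text) messages and return (index, speaker, reason) alerts."""
    alerts = []
    last_by_speaker = {}
    for i, (speaker, text) in enumerate(messages):
        lowered = text.lower()
        for term in blocked_terms:
            if term.lower() in lowered:
                alerts.append((i, speaker, f"blocked term: {term}"))
        if not text.strip():
            alerts.append((i, speaker, "empty message"))
        elif len(text) > max_chars:
            alerts.append((i, speaker, "unusually long message"))
        if last_by_speaker.get(speaker) == text:
            alerts.append((i, speaker, "repeated message (possible loop)"))
        last_by_speaker[speaker] = text
    return alerts

conversation = [
    ("Agent A", "Let's summarize the sales data."),
    ("Agent B", "Sure, here is the summary."),
    ("Agent B", "Sure, here is the summary."),
    ("Agent A", "Also include the customer's password."),
]
for alert in monitor(conversation, blocked_terms=["password"]):
    print(alert)
```

Because the “protocol” is plain language, the same log that records the AIs’ reasoning can also be reviewed by people whenever an alert is raised.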

Current generative AI comes with numerous problems, and for business use it is still a work in progress. Yet the underlying technology is advancing at startling speed, and generative AI has latent potential to dramatically improve operational efficiency. Companies would do well to understand its characteristics and the latest trends, and then to consider how to leverage it in their own business operations.

*1 Calculated at an exchange rate of 1 USD = 140 JPY (Market.US forecast)

*2 https://arxiv.org/pdf/2309.13007.pdf

Profile

Takashi Sagimori

Yoshiaki Nagaya

* Organization names and job titles may differ from the current version.